Data Science and Artificial Intelligence fans, this might be a good day for you. A Google DeepMind cofounder gave a teenage AI fan some advice, and I think you should hear it too!

Some artificial intelligence specialists at companies like Google and Facebook are currently earning more than investment bankers at Goldman Sachs and J.P. Morgan.

These specialists also have the benefit of working in a field of technology that is poised to have a major impact on the world we live in.

For many people, though, it's not clear how to go about landing a job in AI.

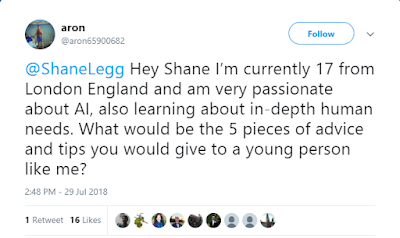

This week, 17-year-old Londoner Aron Chase asked Shane Legg, the chief scientist and cofounder of DeepMind (an AI lab acquired by Google for a reported £400 million), for five pieces of advice for an AI enthusiast like himself.

"Hey Shane I’m currently 17 from London England and am very passionate about AI, also learning about in-depth human needs. What would be the 5 pieces of advice and tips you would give to a young person like me?"

To Chase's surprise, Legg replied, telling him to learn linear algebra well, calculus to an ok level, theory and stats to a good level, the basics in theoretical computer science, and how to code well in Python and C++. He also encouraged him to read and implement machine learning papers, and to "play with stuff!".

Now, that's great advice for Artificial Intelligence enthusiasts who want to begin their journey in this field.

Personally, I think coding in C++ is really essential because most of the frameworks are built on this fast language. Be it TensorFlow, PyTorch, mlpack, or anything else, they all rely on the speed C++ offers. Moreover, the need for math is pretty obvious. (You will get the hang of this the moment you start studying K Nearest Neighbors in Machine Learning or forward propagation in Deep Learning.)
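To give a feel for why the math matters, here is a tiny sketch of my own (not something from Legg's reply) of the two ideas mentioned above, written in plain Python. The function names `knn_predict` and `dense_forward`, the toy data, and the choice of a sigmoid activation are all assumptions made just for illustration; a real project would likely lean on a library instead.

```python
import math
from collections import Counter


def euclidean_distance(a, b):
    # Straight-line distance between two points: this is the linear algebra part.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(points, labels, query, k=3):
    # Sort the training points by distance to the query and keep the k closest.
    nearest = sorted(
        zip(points, labels),
        key=lambda pair: euclidean_distance(pair[0], query),
    )[:k]
    # Majority vote among the k nearest labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def dense_forward(weights, bias, x):
    # Forward propagation through one layer: sigmoid(W x + b).
    z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [1.0 / (1.0 + math.exp(-v)) for v in z]


# Hypothetical toy data: two small clusters labelled "a" and "b".
points = [(1.0, 1.0), (1.5, 2.0), (5.0, 5.0), (6.0, 5.5)]
labels = ["a", "a", "b", "b"]

print(knn_predict(points, labels, (1.2, 1.4), k=3))
print(dense_forward([[0.5, -0.5]], [0.0], [1.0, 1.0]))
```

Even in this toy version, the distance formula and the matrix-vector product are doing all the real work, which is roughly why linear algebra tops the list.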

As for getting the hang of implementing Machine Learning papers, I still have to do it myself, so I can't say much about that :P

Anyway, that's all for this post. Do pay heed to this advice!

Uddeshya Singh
